Are robots just tools? Lee Chan believes that robots can become creators who inspire us. For robots and humans to coexist, a new relationship must be established, and the first step is to understand each other’s gaze.

The reason humans and robots coexist

Lee Chan focuses on the driving robot: a robot that can recognize its surroundings (Sensing), plan how to move (Planning), and put that plan into action (Acting). Lee Chan describes the robot as ‘AI that can be physically actualized at last’. He adds ‘at last’ because there are still many things that robots cannot do. Walking is more convenient for humans, while rolling on wheels is more efficient for robots. Each has its own area of competence. The one thing that humans still do better than robots is ‘manipulation’. Humans spontaneously make judgements and manipulate objects when they encounter unfamiliar situations. Robots plan and move in the way they were trained. In this human-centered world, robots and humans have each evolved to do better at what they are good at. Each will keep growing in its own field, and the two will maintain a mutually beneficial relationship. The future relationship between robots and humans also matters, and relationships are what Lee Chan focuses on. He considers understanding each other to be the first step toward a relationship.

Lee Chan’s robot works are equipped with laser sensors. A laser sensor fires a laser at an object and extracts information from the reflected light. The robot recognizes color and the depth of space with the laser and draws the world based on its own interpretation. How does a robot that learns RGB and depth as 0s and 1s recognize space and color? One wonders, and perhaps worries, how humans will appear in its view. In the exhibition hall, the robot moves repeatedly according to an algorithm. There are pillars in the hall, fitted with LED lights that emit light. The robot moves around the space and recognizes the light poles installed in it. The robot expresses the distance to each pillar as color. As it moves through the space, it draws the space in a different shape at every moment. The drawings are projected back into the space with a projector for the audience to see. Unlike robots that were developed to understand the human point of view, this work lets humans understand the robot’s point of view. This is a new gaze that we will have to become accustomed to, and it is the beginning of our relationship with robots.
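The text does not say how the work converts distance into color, so the following is only a minimal sketch of one plausible mapping. It assumes a linear scale from red (near) to blue (far); the function name `distance_to_rgb` and the `max_range_m` parameter are hypothetical and are not taken from the artwork.

```python
import colorsys


def distance_to_rgb(distance_m, max_range_m=10.0):
    """Map a distance reading to an RGB color (assumed mapping, not the artist's)."""
    # Clamp the reading to [0, max_range_m] so out-of-range values stay valid.
    clamped = min(max(distance_m, 0.0), max_range_m)
    # Near objects get hue 0 (red); the farthest objects get hue 2/3 (blue).
    hue = (clamped / max_range_m) * (2.0 / 3.0)
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, 1.0)
    # Scale from the 0..1 floats returned by colorsys to 8-bit channels.
    return (round(r * 255), round(g * 255), round(b * 255))
```

Under these assumptions, a pillar right next to the robot would be drawn in red and one at the edge of the sensor range in blue, with green in between.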

The skeleton of the driving robot: Clearpath Robotics Dingo

In Lee Chan’s view, the basic function of a robot is to recognize its surroundings, make a plan (a route), and move. Dingo is the best experimental robot for implementing this. It is equipped with sensors that help it recognize its surroundings and a computer that helps it make plans.
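The recognize, plan, and move cycle described above can be illustrated with a toy example. This is a sketch under stated assumptions, not the software that runs on Dingo: the grid map, the function names `sense`, `plan`, and `act`, and the choice of breadth-first search as the planner are all made up for illustration.

```python
from collections import deque

# Hypothetical toy map: S = start, G = goal, # = obstacle, . = free cell.
GRID = [
    "S.#.",
    ".##.",
    "...G",
]


def sense(grid):
    """Sensing: read the start, the goal, and the blocked cells from the map."""
    start = goal = None
    walls = set()
    for r, row in enumerate(grid):
        for c, ch in enumerate(row):
            if ch == "S":
                start = (r, c)
            elif ch == "G":
                goal = (r, c)
            elif ch == "#":
                walls.add((r, c))
    return start, goal, walls


def plan(start, goal, walls, rows, cols):
    """Planning: breadth-first search for a shortest 4-connected route."""
    queue = deque([start])
    came_from = {start: None}
    while queue:
        cell = queue.popleft()
        if cell == goal:
            break
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nxt[0] < rows and 0 <= nxt[1] < cols
            if inside and nxt not in walls and nxt not in came_from:
                came_from[nxt] = cell
                queue.append(nxt)
    if goal not in came_from:
        return []  # no route exists
    # Walk back from the goal to the start, then reverse.
    route = []
    cell = goal
    while cell is not None:
        route.append(cell)
        cell = came_from[cell]
    return route[::-1]


def act(route):
    """Acting: step through the route and return the final position."""
    if not route:
        return None
    position = route[0]
    for cell in route[1:]:
        position = cell  # a real robot would send a motion command here
    return position


def run():
    start, goal, walls = sense(GRID)
    route = plan(start, goal, walls, len(GRID), len(GRID[0]))
    return route, act(route)
```

Calling `run()` returns the planned route and the cell the simulated robot ends on. A real driving robot repeats this cycle continuously, because what it senses changes as it moves.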

In an era when the metaverse is blurring the boundary between the virtual world and reality, moving robots are one of the tools that make us aware that we are still living in the real world. To move is to prove that we live in the present, and robotics is a science that engages with reality. This is the value of robots.

Sensors close to the eyes: Intel RealSense

A robot can carry countless kinds of sensors. Among them, RealSense most closely resembles the human eye. It can measure the distance to objects using two lenses. Lenses of this kind are mainly used in game consoles that recognize human motion. Lee Chan used RealSense while testing the work. However, because the image was projected with a projector, the space had to be dark, which made it difficult to use a sensor that requires light. When the plan changed to the laser sensor, the visual output changed as well.
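Measuring distance with two lenses is commonly explained with the pinhole stereo relation: depth equals focal length times baseline divided by disparity, where disparity is how far the same point shifts between the left and right images. The sketch below shows only that textbook relation. The function name and the numbers in the usage note are illustrative assumptions, not RealSense specifications or its API.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Estimate depth in meters from stereo disparity (textbook pinhole model)."""
    if disparity_px <= 0:
        # Zero disparity means the point is effectively infinitely far away.
        return float("inf")
    # The larger the shift between the two images, the closer the object.
    return focal_length_px * baseline_m / disparity_px
```

For example, with an assumed focal length of 600 pixels and an assumed 0.05 m gap between the lenses, a point that shifts by 30 pixels would sit about 1 m away, and a point that shifts by 60 pixels about 0.5 m away.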

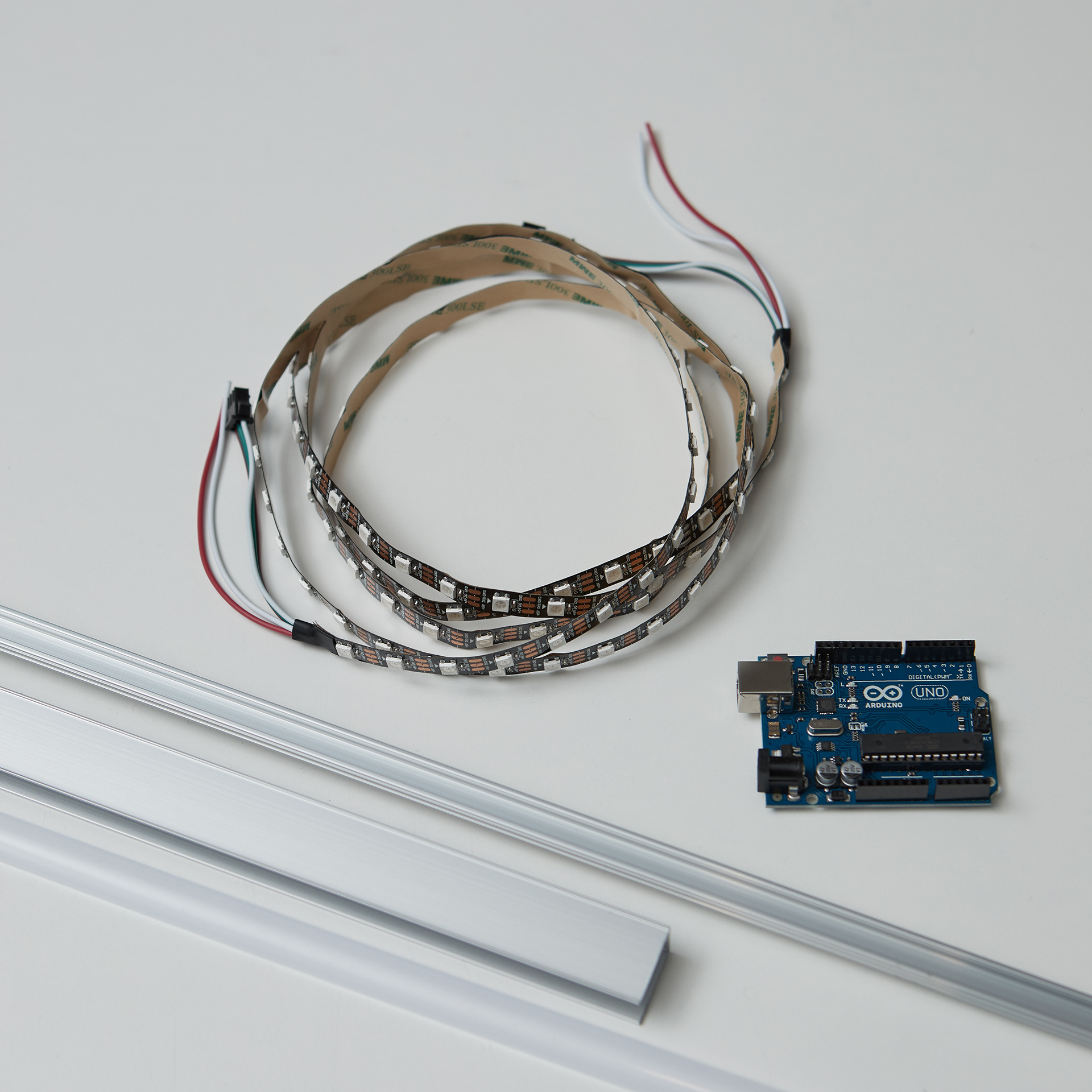

Minimal devices that connect with reality: LED poles

The LED poles installed in the space are objects that add depth to the two-dimensional projected medium. They help the robot and the audience communicate with each other in a more three-dimensional way. Ultimately, the robot’s sense of distance is visually augmented and delivered to people. The robot’s perspective, which recognizes objects as binary numbers, is rather rigid, and the added color lets people empathize more with the robot’s senses.

About the Artist

Lee Chan talks about how the boundaries of the human image change through technology, including robots. He majored in mechanical engineering at KAIST and gained extensive professional experience with logistics robots at Naver Labs, Woowa Brothers, and Twinny. As the CEO of Floatic, which develops mobile swarm robots and control software, he won the ‘2021 KAIST Engineering Innovator Award’ and the ‘2014 Talent Award of Korea’, and was a ‘2014 Intel-ISEF Special Award Winner’.