What do we look like when we are in love? Dutch artists Karen Lancel and Herman Maat analyzed brain waves to visualize feelings of love. They even analyzed the brain waves of the audience watching two people being intimate.

Is there anything worse than not being able to find the air conditioner's remote control on a hot summer day? It is enough to make you resent manufacturers that do not build voice recognition into their air conditioners.

To operate a device, we must rely on input devices that relay our commands, such as keyboards, mice, and remote controls. Beyond voice recognition and motion recognition, BCI (Brain-Computer Interface) technology is being developed that lets you operate a device with your thoughts alone. It connects brain signals directly to a computer. BCI consists of three stages: a signal measurement stage that picks up signals from the brain, a signal conversion stage that converts them into an electrical signal the machine can understand, and a final output stage that transmits the converted and analyzed signal to the device. To transmit brain signals cleanly, the user must concentrate, because sudden changes in thought or sudden movements add noise to the signal.
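The three stages described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how any real BCI product works: the function names `measure_signal`, `convert_signal`, and `output_command`, the synthetic 10 Hz wave standing in for a headset reading, the moving-average filter, and the 0.1 power threshold are all hypothetical choices made for this example.

```python
import math
import random


def measure_signal(n_samples=256, sample_rate=128.0, freq_hz=10.0, noise=0.2, seed=0):
    """Stage 1 (measurement): stand-in for a headset reading.

    Assumption: returns a synthetic 10 Hz sine wave plus Gaussian noise
    instead of data from real electrodes.
    """
    rng = random.Random(seed)
    return [
        math.sin(2 * math.pi * freq_hz * i / sample_rate) + rng.gauss(0.0, noise)
        for i in range(n_samples)
    ]


def convert_signal(samples, window=5):
    """Stage 2 (conversion): smooth the samples, then reduce them to one number.

    A moving average damps sudden spikes (the "noise" caused by abrupt
    changes), and the mean squared amplitude serves as a simple power feature.
    """
    half = window // 2
    smoothed = []
    for i in range(len(samples)):
        chunk = samples[max(0, i - half): i + half + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return sum(x * x for x in smoothed) / len(smoothed)


def output_command(power, threshold=0.1):
    """Stage 3 (output): turn the feature into a command for the device.

    Assumption: the threshold is arbitrary and chosen only for this sketch.
    """
    return "ON" if power >= threshold else "OFF"


if __name__ == "__main__":
    signal = measure_signal()
    print(output_command(convert_signal(signal)))
```

A real system would replace the first stage with electrode readings and the second with far more careful filtering and classification; the point here is only the shape of the pipeline.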

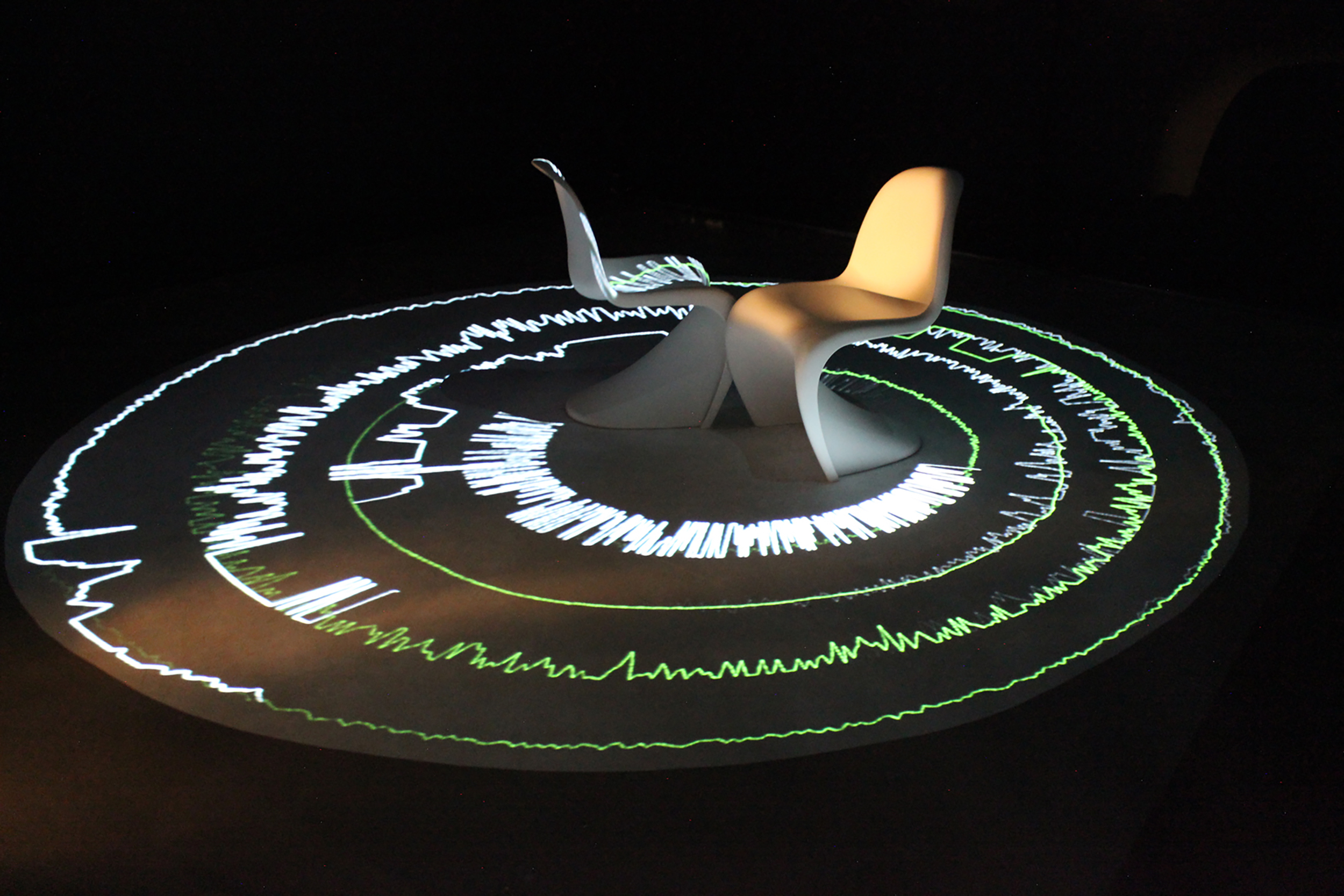

Dutch artists Karen Lancel and Herman Maat use intense brain signals in their work. The performance piece “Shared Sense: Intimacy Data Symphony” measures the signals generated when people touch each other. Two people sit on chairs in the middle of the stage, each wearing a wearable EEG device. Then they start hugging and kissing. The brain signals generated at that moment are visualized and projected onto the stage floor. The audience watching is also part of the work: audience members wear signal-measuring devices too as they watch the scene on stage. You can see the brain waves of the two people being intimate resonate with the brain waves of the people watching them. The purpose of the work is simple. It asks whether we can move beyond physical touch and share intimacy through computer-mediated communication.

Q. Is it possible to fully interpret emotions as biological signals?

A. Is there any way to fully convey your feelings? We don’t even know whether language can convey 100% of our emotions. Through the Intimacy Data Symphony, we are observing how emotions are transferred and changed, and exploring the possibility of turning emotions into signals and sharing them with everyone. EEG data is generated by the people kissing on stage; the signal is measured with electrodes attached to the scalp. We also started collecting EEG data from the audience, and we found moments when the brain waves of the audience resonated with those of the people on stage. Inspired by the results of this experiment, we built them into our work “Symphony.”

Q. What is the main technology of this work?

A. We used BCI technology, which converts brain signals into electronic signals. Research is active enough that the technology can already be used to write text. Until now, BCI research has focused on one-to-one interaction between a person and a computer. We introduced multi-BCI technology, which shares the brain signals of several people: it measures the EEG of multiple people and analyzes how their signals relate to one another. With this technology, people can communicate by thought alone. You could also play a game in which players pool their thoughts to complete missions. It could also become the basis of new communication technology that connects humans with computers and humans with humans.
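As a rough illustration of what "measuring the EEG of several people and analyzing how their signals relate" might involve, the sketch below compares every pair of recordings with a Pearson correlation. This is not the artists' method, which the interview does not describe; Pearson correlation is swapped in here as the simplest possible measure of similarity, and the names `pearson` and `pairwise_synchrony` and the participant labels are hypothetical.

```python
import math


def pearson(a, b):
    """Pearson correlation between two equally long lists of samples."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("signals must have the same length (at least 2 samples)")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # a flat signal carries no information about co-variation
    return cov / math.sqrt(var_a * var_b)


def pairwise_synchrony(recordings):
    """Correlate every pair of participants.

    recordings: dict mapping a participant label to a list of samples.
    Returns a dict mapping (label_a, label_b) to a value between -1 and 1.
    """
    names = sorted(recordings)
    return {
        (a, b): pearson(recordings[a], recordings[b])
        for i, a in enumerate(names)
        for b in names[i + 1:]
    }


if __name__ == "__main__":
    # Assumption: synthetic waves stand in for real EEG recordings.
    wave = [math.sin(2 * math.pi * 10 * i / 128) for i in range(256)]
    recordings = {
        "actor_1": wave,
        "actor_2": [2 * x for x in wave],   # same rhythm, larger amplitude
        "viewer": [-x for x in wave],       # same rhythm, opposite phase
    }
    for pair, value in pairwise_synchrony(recordings).items():
        print(pair, round(value, 3))
```

Values near 1 mean two signals rise and fall together, values near -1 mean they move in opposition, and values near 0 mean no linear relationship. Real EEG synchrony analysis typically works per frequency band and is considerably more involved.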

In the work, only two actors physically touch each other. In the digital world, however, the EEG of the actors and the audience resonates, so that many people are touching each other at the same time.

Q. Is it possible for multiple people to share intimacy digitally?

A. The phrase “group kiss” may sound negative. Quantifying a word such as “friendliness” is also uncomfortable, and transmitting emotions through digital signals was a challenge. We collaborated with the audience to break with conventional wisdom in a digital way. Through these attempts, vague things became clear: we were able to confirm visually things that are usually ambiguous, such as a sense of solidarity and consensus.

Q. What did you pay attention to while implementing the work?

A. We succeeded in visualizing the senses. People feel they can understand more concretely what something feels like by watching visuals made from the signals. We thought about ways to heighten this feeling and added the step of converting the signals into sound. We fed the EEG data into algorithms that generate a musical score. Each audience member wears a headset and listens to the sound. The sound is produced from each audience member’s own EEG data, so everyone hears something different.

Q. Please tell us about your plans.

A. There are regions where our work is hard to accept. However, we believe this work will have a positive social and technological impact. It can serve a variety of purposes, such as building consensus with the socially underprivileged, fostering a sense of community within a group, and providing a tool for dialogue and reflection.

About the artists

Karen Lancel and Herman Maat are a duo of artists and scientists who continue to present projects using Artificial Emotion (AE) and Brain-Computer Interface (BCI) technology. Through audience-participatory installation works, they propose new ways to share human intimacy. They won the GAAP Global Art & AI Competition 2019 Award (China) and the EMARE / EMAP Award, and participated in the European Media Art Platform – European Union Creative Europe Program.